robots.txt 2.0 review

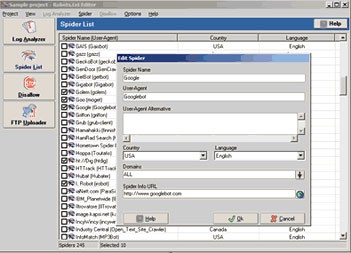

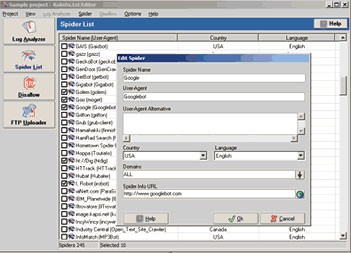

robots.txt is a script in PHP that acts like a normal robots.txt file, but with a few differences.

When a search engine spider requests robots.txt, the script returns "Disallow" rules for a predefined list of directories. When an ordinary visitor requests it, the script returns a rule that "disallows" every directory.

This is a security precaution meant to stop visitors from discovering "hidden" directories by reading the robots.txt file.
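The review does not say how the PHP script tells spiders from visitors. The Python sketch below assumes the common approach of matching the User-Agent header against known crawler names. The signature list, the directory list, and the function names `is_spider` and `robots_txt` are all hypothetical and are not taken from the original script.

```python
# Hypothetical crawler signatures; the real script's detection method is not documented.
SPIDER_SIGNATURES = ("googlebot", "bingbot", "slurp", "duckduckbot")

# Hypothetical directories the site owner wants crawlers to skip.
SPIDER_DISALLOWED = ["/admin/", "/private/", "/tmp/"]


def is_spider(user_agent: str) -> bool:
    """Return True if the User-Agent header looks like a known crawler."""
    ua = user_agent.lower()
    return any(sig in ua for sig in SPIDER_SIGNATURES)


def robots_txt(user_agent: str) -> str:
    """Build the robots.txt body to serve for the given User-Agent."""
    if is_spider(user_agent):
        # Crawlers get the real, targeted list of directories to avoid.
        paths = SPIDER_DISALLOWED
    else:
        # Ordinary visitors get a blanket rule, so no directory names leak.
        paths = ["/"]
    lines = ["User-agent: *"] + [f"Disallow: {p}" for p in paths]
    return "\n".join(lines) + "\n"
```

For a browser User-Agent such as Firefox, `robots_txt` returns only `User-agent: *` followed by `Disallow: /`. A Googlebot User-Agent gets the three specific `Disallow` lines instead. Because the User-Agent header is supplied by the client, anyone can send a crawler string and see the real list, so under this assumption the approach only obscures the directories rather than protecting them.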

What's New in This Release:

Fixed two serious bugs that prevented robots.txt from working properly.

Fixed a bug that made the output unparseable by web crawlers.

Added a new function to clean up data read from the files.
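The release notes do not describe the file format or what the new cleanup function does. The sketch below shows one plausible kind of normalization for directory entries read from a plain-text list, followed by output with one directive per line, which is the line-oriented form crawlers read. The input format, the comment syntax, and the names `clean_entries` and `render` are assumptions made for illustration. They do not describe the actual 2.0 code.

```python
def clean_entries(raw_lines):
    """Normalize directory entries read from a list file (hypothetical format)."""
    cleaned = []
    seen = set()
    for line in raw_lines:
        # Drop an assumed '#' comment, then surrounding whitespace (including '\r' from CRLF files).
        entry = line.split("#", 1)[0].strip()
        if not entry:
            continue  # skip blank and comment-only lines
        # robots.txt paths are expected to start with '/'.
        if not entry.startswith("/"):
            entry = "/" + entry
        if entry not in seen:  # keep the first occurrence, drop duplicates
            seen.add(entry)
            cleaned.append(entry)
    return cleaned


def render(entries):
    """Emit one Disallow directive per line under a single User-agent group."""
    lines = ["User-agent: *"] + [f"Disallow: {e}" for e in entries]
    return "\n".join(lines) + "\n"


raw = ["admin/\r\n", "  /private/  # staff only\n", "\n", "# comment\n", "/admin/\n"]
print(render(clean_entries(raw)), end="")
```

With the sample input above, `clean_entries` yields `["/admin/", "/private/"]`. The CRLF ending, the inline comment, the blank line, and the duplicate are all removed before any `Disallow` line is written.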
